Aff-Wild2 database

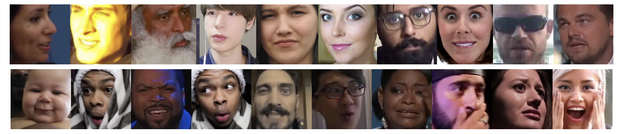

Frames of Aff-Wild2, showing subjects of different ethnicities, age groups, emotional states, head poses, illumination conditions and occlusions

Progress in affective computing has been limited largely by the lack of available data. With the rise of deep learning as the default approach to computer vision tasks, the need to collect and annotate diverse in-the-wild datasets has become apparent.

Some in-the-wild databases have recently been proposed. However: i) their size is small, ii) they are not audiovisual, iii) only a small part is manually annotated, iv) they contain a small number of subjects, or v) they are not annotated for all main behavior tasks (valence-arousal estimation, action unit detection and basic expression classification).

To address these limitations, we substantially extend the largest available in-the-wild database (Aff-Wild) to study continuous emotions such as valence and arousal. Furthermore, we annotate parts of the database with basic expressions and action units (AUs). We call this database Aff-Wild2. To the best of our knowledge, Aff-Wild2 is the only in-the-wild database containing annotations for all three main behavior tasks. It is also a large-scale database, and the first audiovisual database with AU annotations: all other AU-annotated databases contain only images or videos, without audio.

Aff-Wild2 is annotated on a per-frame basis for the seven basic expressions (i.e., happiness, surprise, anger, disgust, fear, sadness and the neutral state), twelve action units (AUs 1, 2, 4, 6, 7, 10, 12, 15, 23, 24, 25, 26), and valence and arousal. In total, Aff-Wild2 consists of 564 videos of around 2.8M frames with 554 subjects (326 male and 228 female). All videos have been annotated in terms of valence and arousal. 546 videos of around 2.6M frames have been annotated in terms of the basic expressions. 541 videos of around 2.6M frames have been annotated in terms of action units. Aff-Wild2 displays great diversity in subjects' ages, ethnicities and nationalities, as well as great variation in environments.

How to acquire Aff-Wild2

If you are an academic (i.e., a person with a permanent position at a research institute or university, e.g. a professor, but not a Post-Doc or a PhD/PG/UG student), please:

i) fill in this EULA;

ii) use your official academic email (as data cannot be released to personal emails);

iii) send an email to d.kollias@qmul.ac.uk with subject: Aff-Wild2 request by academic;

iv) include in the email the signed EULA, the reason why you require access to the Aff-Wild2 database, and your official academic website.

Post-Docs and Ph.D. students: your supervisor/advisor should perform the steps described above.

If you are from industry and want to acquire Aff-Wild2, please send an email from your official company email address to d.kollias@qmul.ac.uk with subject: Aff-Wild2 request from industry. Explain why you need access to the database, and specify whether it is for research or commercial purposes.

If you are an undergraduate or postgraduate student (but not a Ph.D. student), please:

i) fill in this EULA; you must print the EULA and sign it in ink (a wet signature is required; electronic signatures will not be accepted);

ii) use your official university email (data cannot be released to personal emails);

iii) send an email to d.kollias@qmul.ac.uk with subject: Aff-Wild2 request by student;

iv) include in the email the signed EULA and proof/verification of your current student status (e.g. student ID card).

To all UG/PG students:

1) The database cannot be shared with students for research internships; the supervisor should instead follow the steps outlined in the two cases above (industry or academia).

2) If the email does not contain all the required information, or if it contains incorrect information (e.g. an EULA that is not filled in, not signed, not signed in ink, or the wrong EULA), the access request will be automatically rejected and there will be no reply.

Due to the high volume of requests, please allow about 14 days for a reply to your access request.

References

If you use the above data, you must cite all of the following papers (and the white paper):

- D. Kollias, et al.: "Advancements in Affective and Behavior Analysis: The 8th ABAW Workshop and Competition", 2024

@article{Kollias2025, author = "Dimitrios Kollias and Panagiotis Tzirakis and Alan S. Cowen and Stefanos Zafeiriou and Irene Kotsia and Eric Granger and Marco Pedersoli and Simon L. Bacon and Alice Baird and Chris Gagne and Chunchang Shao and Guanyu Hu and Soufiane Belharbi and Muhammad Haseeb Aslam", title = "{Advancements in Affective and Behavior Analysis: The 8th ABAW Workshop and Competition}", year = "2025", month = "3", doi = "10.6084/m9.figshare.28524563.v4"}

@article{kolliasadvancements, title={Advancements in Affective and Behavior Analysis: The 8th ABAW Workshop and Competition}, author={Kollias, Dimitrios and Tzirakis, Panagiotis and Cowen, Alan and Kotsia, Irene and Cogitat, UK and Granger, Eric and Pedersoli, Marco and Bacon, Simon and Baird, Alice and Shao, Chunchang and others}}

- D. Kollias, et al.: "7th ABAW Competition: Multi-Task Learning and Compound Expression Recognition", 2024

@article{kollias20247th,title={7th abaw competition: Multi-task learning and compound expression recognition},author={Kollias, Dimitrios and Zafeiriou, Stefanos and Kotsia, Irene and Dhall, Abhinav and Ghosh, Shreya and Shao, Chunchang and Hu, Guanyu},journal={arXiv preprint arXiv:2407.03835},year={2024}}

- D. Kollias, et al.: "The 6th Affective Behavior Analysis in-the-wild (ABAW) Competition". IEEE CVPR, 2024

@inproceedings{kollias20246th,title={The 6th affective behavior analysis in-the-wild (abaw) competition},author={Kollias, Dimitrios and Tzirakis, Panagiotis and Cowen, Alan and Zafeiriou, Stefanos and Kotsia, Irene and Baird, Alice and Gagne, Chris and Shao, Chunchang and Hu, Guanyu},booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},pages={4587--4598},year={2024}}

- D. Kollias, et al.: "Distribution Matching for Multi-Task Learning of Classification Tasks: a Large-Scale Study on Faces & Beyond". AAAI, 2024

@inproceedings{kollias2024distribution,title={Distribution matching for multi-task learning of classification tasks: a large-scale study on faces \& beyond},author={Kollias, Dimitrios and Sharmanska, Viktoriia and Zafeiriou, Stefanos},booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},volume={38},number={3},pages={2813--2821},year={2024}}

- D. Kollias, et al.: "ABAW: Valence-Arousal Estimation, Expression Recognition, Action Unit Detection & Emotional Reaction Intensity Estimation Challenges". IEEE CVPR, 2023

@inproceedings{kollias2023abaw2, title={Abaw: Valence-arousal estimation, expression recognition, action unit detection \& emotional reaction intensity estimation challenges}, author={Kollias, Dimitrios and Tzirakis, Panagiotis and Baird, Alice and Cowen, Alan and Zafeiriou, Stefanos}, booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, pages={5888--5897}, year={2023}}

- D. Kollias: "ABAW: Learning from Synthetic Data & Multi-Task Learning Challenges". ECCV, 2022

@inproceedings{kollias2023abaw, title={ABAW: learning from synthetic data \& multi-task learning challenges}, author={Kollias, Dimitrios}, booktitle={European Conference on Computer Vision}, pages={157--172}, year={2023}, organization={Springer}}

- D. Kollias: "ABAW: Valence-Arousal Estimation, Expression Recognition, Action Unit Detection & Multi-Task Learning Challenges". IEEE CVPR, 2022

@inproceedings{kollias2022abaw, title={Abaw: Valence-arousal estimation, expression recognition, action unit detection \& multi-task learning challenges}, author={Kollias, Dimitrios}, booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, pages={2328--2336}, year={2022}}

- D. Kollias, et al.: "Analysing Affective Behavior in the Second ABAW2 Competition". ICCV, 2021

@inproceedings{kollias2021analysing, title={Analysing affective behavior in the second abaw2 competition}, author={Kollias, Dimitrios and Zafeiriou, Stefanos}, booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision}, pages={3652--3660}, year={2021}}

- D. Kollias, et al.: "Distribution Matching for Heterogeneous Multi-Task Learning: a Large-scale Face Study", 2021

@article{kollias2021distribution, title={Distribution Matching for Heterogeneous Multi-Task Learning: a Large-scale Face Study}, author={Kollias, Dimitrios and Sharmanska, Viktoriia and Zafeiriou, Stefanos}, journal={arXiv preprint arXiv:2105.03790}, year={2021} }

- D. Kollias, S. Zafeiriou: "Affect Analysis in-the-wild: Valence-Arousal, Expressions, Action Units and a Unified Framework", 2021

@article{kollias2021affect, title={Affect Analysis in-the-wild: Valence-Arousal, Expressions, Action Units and a Unified Framework}, author={Kollias, Dimitrios and Zafeiriou, Stefanos}, journal={arXiv preprint arXiv:2103.15792}, year={2021}}

- D. Kollias, et al.: "Analysing Affective Behavior in the First ABAW 2020 Competition". IEEE FG, 2020

@inproceedings{kollias2020analysing, title={Analysing Affective Behavior in the First ABAW 2020 Competition}, author={Kollias, D and Schulc, A and Hajiyev, E and Zafeiriou, S}, booktitle={2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020)(FG)}, pages={794--800}}

- D. Kollias, S. Zafeiriou: "Expression, Affect, Action Unit Recognition: Aff-Wild2, Multi-Task Learning and ArcFace". BMVC, 2019

@article{kollias2019expression, title={Expression, Affect, Action Unit Recognition: Aff-Wild2, Multi-Task Learning and ArcFace}, author={Kollias, Dimitrios and Zafeiriou, Stefanos}, journal={arXiv preprint arXiv:1910.04855}, year={2019}}

- D. Kollias, et al.: "Face Behavior a la carte: Expressions, Affect and Action Units in a Single Network", 2019

@article{kollias2019face,title={Face Behavior a la carte: Expressions, Affect and Action Units in a Single Network}, author={Kollias, Dimitrios and Sharmanska, Viktoriia and Zafeiriou, Stefanos}, journal={arXiv preprint arXiv:1910.11111}, year={2019}}

- D. Kollias, et al.: "Deep Affect Prediction in-the-wild: Aff-Wild Database and Challenge, Deep Architectures, and Beyond". International Journal of Computer Vision (IJCV), 2019

@article{kollias2019deep, title={Deep affect prediction in-the-wild: Aff-wild database and challenge, deep architectures, and beyond}, author={Kollias, Dimitrios and Tzirakis, Panagiotis and Nicolaou, Mihalis A and Papaioannou, Athanasios and Zhao, Guoying and Schuller, Bj{\"o}rn and Kotsia, Irene and Zafeiriou, Stefanos}, journal={International Journal of Computer Vision}, pages={1--23}, year={2019}, publisher={Springer}}

- S. Zafeiriou, et al.: "Aff-Wild: Valence and Arousal in-the-wild Challenge". CVPR, 2017

@inproceedings{zafeiriou2017aff, title={Aff-wild: Valence and arousal ‘in-the-wild’challenge}, author={Zafeiriou, Stefanos and Kollias, Dimitrios and Nicolaou, Mihalis A and Papaioannou, Athanasios and Zhao, Guoying and Kotsia, Irene}, booktitle={Computer Vision and Pattern Recognition Workshops (CVPRW), 2017 IEEE Conference on}, pages={1980--1987}, year={2017}, organization={IEEE} }

Evaluation of your predictions on the test set

First, state which task (VA, AU or Expression) your predictions correspond to. The predictions must follow the same format as the annotation files that we provide. In detail:

In the VA case: Send files named after the corresponding videos. Each line of each file should contain the valence and arousal values for the corresponding frame, separated by a comma. For instance, for file 271.csv:

line 1 should be: valence,arousal

line 2 should be: valence_of_first_frame,arousal_of_first_frame (for instance it could be: 0.53,0.28)

line 3 should be: valence_of_second_frame,arousal_of_second_frame

...

last line: valence_of_last_frame,arousal_of_last_frame
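As a minimal sketch of the VA layout described above, the following Python snippet builds the file contents from a list of per-frame predictions. The function name `format_va_file` and the example values are illustrative assumptions and not part of any official tooling.

```python
def format_va_file(predictions):
    """Return the text of a VA prediction file.

    `predictions` is a sequence of (valence, arousal) pairs, one per frame,
    in frame order. The first output line is the header "valence,arousal".
    """
    lines = ["valence,arousal"]
    for valence, arousal in predictions:
        lines.append(f"{valence},{arousal}")
    return "\n".join(lines) + "\n"


# Hypothetical predictions for a three-frame video.
example_va = [(0.53, 0.28), (0.5, 0.3), (-0.1, 0.05)]
va_text = format_va_file(example_va)
```

The returned string could then be saved under the video's name, for example with `pathlib.Path("271.csv").write_text(va_text)`.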

In the Expression case: Send files named after the corresponding videos. Each line of each file should contain the basic expression prediction for the corresponding frame (0, 1, 2, 3, 4, 5 or 6, where 0 denotes neutral, 1 anger, 2 disgust, 3 fear, 4 happiness, 5 sadness and 6 surprise). For instance, for file 282.csv:

first line should be: Neutral,Anger,Disgust,Fear,Happiness,Sadness,Surprise

second line should be: basic_expression_prediction_of_first_frame (such as 5)

...

last line should be: basic_expression_prediction_of_last_frame
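The Expression layout above can be sketched in Python as follows. The names `EXPRESSIONS` and `format_expression_file` are hypothetical, and the range check is an added safeguard rather than something the text requires.

```python
# Index in this list is the integer label described above (0 = neutral, ..., 6 = surprise).
EXPRESSIONS = ["Neutral", "Anger", "Disgust", "Fear", "Happiness", "Sadness", "Surprise"]


def format_expression_file(labels):
    """Return the text of an Expression prediction file.

    `labels` holds one integer in 0..6 per frame, in frame order.
    The first output line is the comma-separated list of expression names.
    """
    lines = [",".join(EXPRESSIONS)]
    for frame_idx, label in enumerate(labels):
        if label not in range(len(EXPRESSIONS)):
            raise ValueError(f"frame {frame_idx}: label {label!r} is not in 0..6")
        lines.append(str(int(label)))
    return "\n".join(lines) + "\n"


# Hypothetical predictions for a four-frame video.
expr_text = format_expression_file([5, 5, 4, 0])
```

Keeping the names in a list indexed by label also makes it easy to turn a prediction back into a readable name, e.g. `EXPRESSIONS[5]` is `"Sadness"`.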

In the AU case: Send files named after the corresponding videos. Each line of each file should contain 8 comma-separated numbers (0 or 1) that correspond to the 8 Action Units (AU1, AU2, AU4, AU6, AU12, AU15, AU20, AU25). For instance, for file video18.csv:

first line should be: AU1,AU2,AU4,AU6,AU12,AU15,AU20,AU25

second line should be: AU1_of_first_frame,AU2_of_first_frame,AU4_of_first_frame,AU6_of_first_frame,AU12_of_first_frame,AU15_of_first_frame,AU20_of_first_frame,AU25_of_first_frame (such as: 0,1,1,0,0,0,0,1)

...

last line should be: AU1_of_last_frame,AU2_of_last_frame,AU4_of_last_frame,AU6_of_last_frame,AU12_of_last_frame,AU15_of_last_frame,AU20_of_last_frame,AU25_of_last_frame
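A possible Python sketch of the AU layout above is shown below. `AU_NAMES` and `format_au_file` are illustrative names, and the validation of length and binary values is an assumption about what is useful to check before sending a file.

```python
# Column order as listed in the AU case above.
AU_NAMES = ["AU1", "AU2", "AU4", "AU6", "AU12", "AU15", "AU20", "AU25"]


def format_au_file(frames):
    """Return the text of an AU prediction file.

    `frames` holds one sequence of 8 binary values (0 or 1) per frame,
    ordered as in AU_NAMES.
    """
    lines = [",".join(AU_NAMES)]
    for frame_idx, activations in enumerate(frames):
        if len(activations) != len(AU_NAMES):
            raise ValueError(f"frame {frame_idx}: expected {len(AU_NAMES)} values")
        if any(value not in (0, 1) for value in activations):
            raise ValueError(f"frame {frame_idx}: values must be 0 or 1")
        lines.append(",".join(str(int(value)) for value in activations))
    return "\n".join(lines) + "\n"


# Hypothetical predictions for a two-frame video.
au_text = format_au_file([
    [0, 1, 1, 0, 0, 0, 0, 1],
    [0, 0, 0, 1, 1, 0, 0, 1],
])
```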

Note that your files should include predictions for all frames in the video (regardless of whether the bounding box failed or not).
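The text does not say which value to emit for frames where the bounding box failed. One option, shown here purely as an assumption, is to carry forward the most recent available prediction and fall back to a chosen default before the first detection. The helper name `fill_missing_frames` and its parameters are hypothetical.

```python
def fill_missing_frames(num_frames, detected, default):
    """Return one prediction per frame, covering frames with no detection.

    `detected` maps a 0-based frame index to its prediction. Frames missing
    from `detected` reuse the latest earlier prediction, or `default` if no
    earlier prediction exists. This fill strategy is an assumption, not a rule
    stated by the dataset documentation.
    """
    filled = []
    last = default
    for frame_idx in range(num_frames):
        if frame_idx in detected:
            last = detected[frame_idx]
        filled.append(last)
    return filled


# Hypothetical 5-frame video where detection succeeded only on frames 1 and 3.
filled_labels = fill_missing_frames(5, {1: 4, 3: 6}, default=0)
```

The resulting list always has exactly `num_frames` entries, so the written file keeps one line per frame of the video.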

Important Information:

- All the training/validation/testing images of the dataset are obtained from YouTube. We are not responsible for the content or the meaning of these images.

- You agree not to reproduce, duplicate, copy, sell, trade, resell or exploit for any commercial purposes, any portion of the images and any portion of derived data.

- You agree not to further copy, publish or distribute any portion of the annotations of the dataset. As an exception, copies of the dataset may be made for internal use at a single site within the same organization.

- We reserve the right to terminate your access to the dataset at any time.