Sound of Pixels

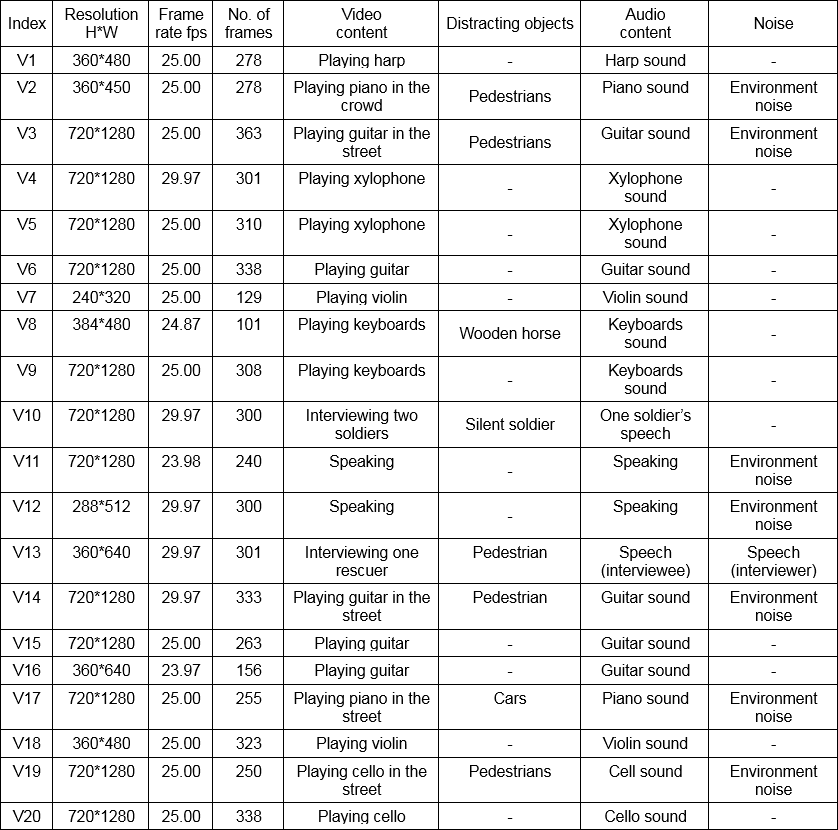

The Blind Audio-Visual Localization (BAVL) Dataset consists of 20 audio-visual recordings of sound sources, which can be talking faces or musical instruments. Nineteen of the recordings are videos from YouTube; the exception is V8, which is from [1]. Video V7 was also used in [2] and [3], and V16 was used in [3]. We annotated all 20 videos ourselves in a uniform manner. Details of the video sequences are listed in Table 1.

The videos in the dataset have an average duration of 10 seconds, and each was recorded with one camera and one microphone. The audio files (.wav) were sampled at 16 kHz for V7, V8, and V16, and at 44.1 kHz for the rest. The video frames contain the sound-making object (sound source) and distracting objects (e.g. pedestrians on the street), while the audio signals consist of the sound produced by the sound source (human speech or instrumental music), environmental noise, and sometimes other sounds. The distracting objects and other irrelevant noise/sounds do not exist in all videos. The primary use of the dataset is to evaluate the performance of sound source localization methods in the presence of distracting motions and noise.
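Because the sampling rate differs between videos, it can be worth checking each audio file before processing. The sketch below is illustrative only: it uses Python's standard `wave` module, and the helper names (`expected_sample_rate`, `actual_sample_rate`, `rate_matches`) as well as any file path passed to them are assumptions, not part of the dataset distribution.

```python
import wave

# Video IDs documented above as sampled at 16 kHz; all others are 44.1 kHz.
LOW_RATE_VIDEOS = {"V7", "V8", "V16"}


def expected_sample_rate(video_id: str) -> int:
    """Return the documented sampling rate in Hz for an ID such as 'V7'."""
    return 16000 if video_id in LOW_RATE_VIDEOS else 44100


def actual_sample_rate(wav_path: str) -> int:
    """Read the sampling rate stored in the header of a WAV file."""
    with wave.open(wav_path, "rb") as wav_file:
        return wav_file.getframerate()


def rate_matches(video_id: str, wav_path: str) -> bool:
    """Check that a WAV file has the rate documented for its video ID."""
    return actual_sample_rate(wav_path) == expected_sample_rate(video_id)
```

A method that assumes a single rate would need to resample either the three 16 kHz recordings or the remaining seventeen before comparing results across videos.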

Table 1. Main Specifications and Contents of the Video Sequences.

Annotation

We provide visual annotations as illustrated in Figure 1. The locations of sound-making objects are marked as white regions. The annotation images are binary, where 1 indicates the presence of a sound source.

Figure 1. An Example of Annotation for V8.
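One possible way to score a localization result against these binary annotations is intersection-over-union (IoU). This is a minimal sketch under stated assumptions: IoU is our choice of metric for illustration and is not stated on this page as the evaluation protocol; masks are represented as nested lists of 0/1 values; and the `binarize` helper with its threshold of 128 is a hypothetical step for the case where an annotation image is stored with 0/255 pixel values.

```python
from typing import List, Sequence

Mask = Sequence[Sequence[int]]


def binarize(gray: Sequence[Sequence[int]], threshold: int = 128) -> List[List[int]]:
    """Map grayscale pixel values to 0/1 (assumed threshold, not from the dataset)."""
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in gray]


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection-over-union of two binary masks of identical shape."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly, so define their IoU as 1.0.
    return intersection / union if union else 1.0


truth = [[0, 1, 1],
         [0, 1, 1]]
pred = [[0, 0, 1],
        [0, 1, 1]]
score = mask_iou(pred, truth)  # 3 overlapping pixels out of 4 in the union
```

For real frames, an array library would be faster than nested lists; plain Python is used here only to keep the sketch dependency-free.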

Data Download

Content: 20 videos of the dataset, in the form of image frames and wav audio files, plus annotations.

Format: zip archive containing jpg and wav files.

Size: 395 MB

Download Link: BAVL_Database.zip
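After downloading, a quick sanity check is to count the files in the archive by extension. The sketch below uses only the Python standard library; the function name `count_by_extension` is hypothetical, and nothing is assumed about the folder layout inside the archive, which is not described on this page.

```python
import zipfile
from collections import Counter
from pathlib import PurePosixPath


def count_by_extension(archive) -> Counter:
    """Count archive members by lowercase file extension.

    `archive` may be a path or a binary file-like object.
    """
    counts = Counter()
    with zipfile.ZipFile(archive) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue  # skip directory entries
            counts[PurePosixPath(info.filename).suffix.lower()] += 1
    return counts
```

Calling `count_by_extension("BAVL_Database.zip")` on the downloaded file should report `.jpg` and `.wav` entries, given the format stated above.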

If you use this dataset, please cite:

@inproceedings{pu2017audio,

title={Audio-visual object localization and separation using low-rank and sparsity},

author={Pu, Jie and Panagakis, Yannis and Petridis, Stavros and Pantic, Maja},

booktitle={Acoustics, Speech and Signal Processing (ICASSP), 2017 IEEE International Conference on},

pages={2901--2905},

year={2017},

organization={IEEE}

}

Acknowledgements

This work has been funded by the European Community Horizon 2020 under grant agreement no. 645094 (SEWA) and no. 688835 (DE-ENIGMA).

References

[1] Kidron, Einat, Yoav Y. Schechner, and Michael Elad. "Pixels that sound." Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on. Vol. 1. IEEE, 2005.

[2] Izadinia, Hamid, Imran Saleemi, and Mubarak Shah. "Multimodal analysis for identification and segmentation of moving-sounding objects." IEEE Transactions on Multimedia 15.2 (2013): 378-390.

[3] Li, Kai, Jun Ye, and Kien A. Hua. "What's making that sound?" Proceedings of the 22nd ACM international conference on Multimedia. ACM, 2014.