1st 3D Face Tracking in-the-wild Competition

Latest News

- For any requests (e.g. regarding the zipped files), please e-mail the competition address listed as 'Workshop Administrator' at the end of this page.

- The 3D Menpo Challenge test set has been released and is now available here. To participate in the competition, you need to send us your results by 10 August.

- If you use the above data, please cite the following papers:

  - S. Zafeiriou, E. Ververas, G. Chrysos, G. Trigeorgis, J. Deng, A. Roussos. "The 3D Menpo Facial Landmark Tracking Challenge", ICCVW, 2017.

  - J. Booth, et al. "3D Face Morphable Models "In-the-Wild"", CVPR, 2017.

Submission of papers is performed through CMT.

3D MENPO DATA

The 3D Menpo Facial Landmark Tracking in-the-Wild Challenge & Workshop will be held in conjunction with the International Conference on Computer Vision (ICCV) 2017 in Venice, Italy.

Organisers

Chairs:

Stefanos Zafeiriou, Imperial College London, UK s.zafeiriou@imperial.ac.uk

Jiankang Deng, Imperial College London, UK j.deng16@imperial.ac.uk

Anastasios Roussos, Imperial College London, UK troussos@imperial.ac.uk

Epameinondas Antonakos, Imperial College London, UK e.antonakos@imperial.ac.uk

Data Chairs:

Grigorios Chrysos, Imperial College London, UK g.chrysos@imperial.ac.uk

George Trigeorgis, Imperial College London, UK george.trigeorgis08@imperial.ac.uk

Evangelos Ververas, Imperial College London, UK e.ververas16@imperial.ac.uk

Scope

Even though a considerable amount of high-quality annotated data has been collected for benchmarking facial landmark localisation and tracking [1,2,3], to the best of our knowledge there is no benchmark for 3D facial landmark tracking in long “in-the-wild” videos. This can be attributed to the difficulty of (a) capturing 3D faces in unconstrained conditions and (b) reliably fitting 3D models to images [4] (until recently). At ICCV’17, building on our recent advances in fitting large-scale 3D Morphable Models [4] to in-the-wild images and videos, we aim to advance the state of the art by organising the first challenge on 3D facial landmark localisation in videos recorded in the wild and by providing the first comprehensive benchmark for the task. The challenge will be the first thorough quantitative evaluation on the topic. Furthermore, the competition will explore how far we are from attaining satisfactory 3D facial landmark tracking results in various scenarios.

The 3D MENPO Challenge

In order to develop a comprehensive benchmark for evaluating 3D facial landmark localisation

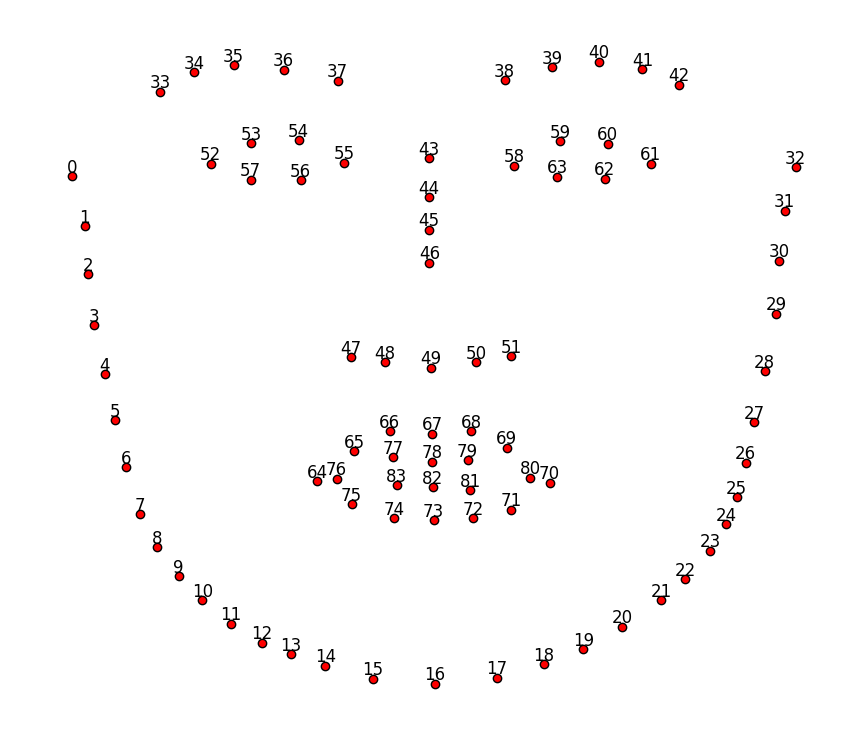

algorithms in the wild in arbitrary poses, we have fitted with state-of-the-art 3D facial morphable models [4] all the images provided by the 300 W and Menpo challenges. The parameters of the model have been carefully selected and all fittings have been visually inspected. The final landmarks have been manually corrected (if needed). We found that for better 3D face landmark localisation more landmarks are required in the boundary, hence we provide 16 more landmarks. The above data can be used for training models. In summary, for static images we provide (a) the x,y coordinates in the image space that correspond to the projections of a 3D model of the face and (b) x,y,z coordinates of the landmarks in the model space. In total, we provide 84 landmarks. The data are available here: 3D Menpo static. Please see Fig. 1 for the markup and 2 to 8 for some examples

Fig. 1: The 84-point mark-up used for our annotations of near-frontal faces.

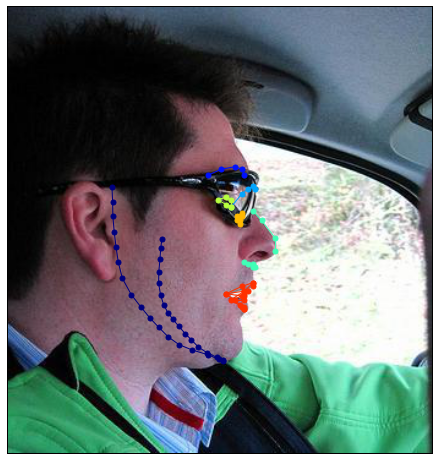

Fig. 2: Example landmarks in a profile face.

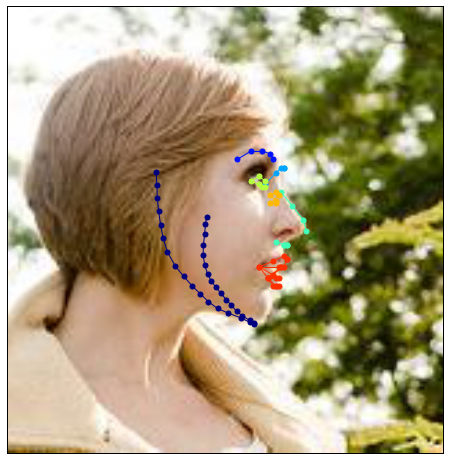

Fig. 3: Example landmarks in a profile face.

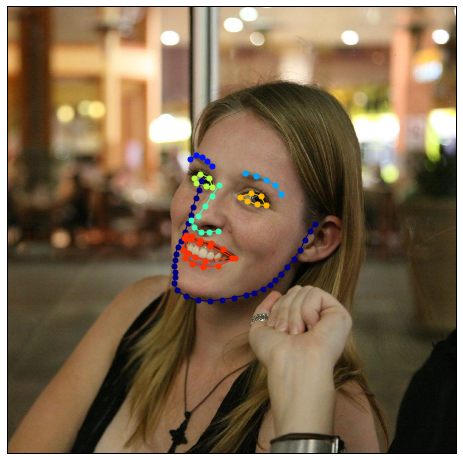


Fig. 4: Example landmarks in a semi-frontal face.
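
The static annotations pair (a) 2D image-space landmarks with (b) 3D model-space landmarks, the former being projections of the latter. As a rough illustration of that relationship, the sketch below builds 3D landmarks from a generic linear shape model (mean shape plus weighted basis components, as in a 3D Morphable Model) and projects them with a weak-perspective camera. The camera model, the toy basis and all function names are assumptions for illustration only; this page does not state which projection or shape basis was used in the fitting [4].

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def linear_shape(mean: Sequence[Point3],
                 basis: Sequence[Sequence[Point3]],
                 params: Sequence[float]) -> List[Point3]:
    """Linear shape model: shape = mean + sum_i params[i] * basis[i].

    `basis` holds one per-landmark displacement field per component
    (a toy stand-in for a 3DMM shape basis, not the model used in [4]).
    """
    shape = [list(p) for p in mean]
    for coeff, component in zip(params, basis):
        for j, displacement in enumerate(component):
            for k in range(3):
                shape[j][k] += coeff * displacement[k]
    return [(p[0], p[1], p[2]) for p in shape]


def rotation_y(angle: float) -> List[List[float]]:
    """3x3 rotation about the vertical (y) axis, i.e. a yaw of `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def weak_perspective(points: Sequence[Point3],
                     rotation: List[List[float]],
                     scale: float,
                     translation: Point2) -> List[Point2]:
    """Weak-perspective projection: (u, v) = scale * (first two rows of R) X + t.

    The rotated depth coordinate is simply dropped (an assumption); a full
    perspective camera would divide by it instead.
    """
    projected = []
    for X in points:
        u = scale * sum(rotation[0][k] * X[k] for k in range(3)) + translation[0]
        v = scale * sum(rotation[1][k] * X[k] for k in range(3)) + translation[1]
        projected.append((u, v))
    return projected
```

Changing the yaw passed to `rotation_y` moves the projected 2D points (a) while the model-space coordinates (b) stay the same, which is why having both is useful for evaluating landmarks in arbitrary poses.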

Furthermore, we provide 3D facial landmarks for all the videos of the 300-VW competition. The landmarks in this case have been produced in a more sophisticated way. In short, a non-rigid structure-from-motion algorithm was applied to the original 2D landmarks of the 300-VW competition to obtain a first estimate of the 3D coordinates. Afterwards, a modified version of [4] that fits all the frames of a video simultaneously was used. Finally, all landmarks were visually inspected and manually corrected where needed. An example video can be found here.

Training

The training facial samples and annotations are available to download from 3D Menpo Tracking.

The training data contain the facial images and their corresponding annotations (.ljson files).
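
For working with the .ljson annotations, a small reader/writer can help. The sketch below assumes the menpo LJSON version 2 layout (a top-level `landmarks` object holding a `points` array, plus `labels` and `version` fields); that layout, the 84-point check and the helper names are assumptions, so verify them against the released files. It passes each point's coordinates through unchanged and does not decide whether they are stored in (x, y) or (y, x) order, nor does it handle `null` entries for missing points.

```python
import json
from typing import Dict, List, Optional, Sequence


def load_ljson_points(text: str, expected_count: int = 84) -> List[List[float]]:
    """Return the landmark coordinates stored in an LJSON (v2-style) string.

    Assumes the points live under data["landmarks"]["points"]; the axis
    order of each point is passed through unchanged.
    """
    data = json.loads(text)
    points = data["landmarks"]["points"]
    if len(points) != expected_count:
        raise ValueError(f"expected {expected_count} points, got {len(points)}")
    dims = {len(p) for p in points}
    if dims not in ({2}, {3}):
        raise ValueError(f"points must be all 2D or all 3D, got sizes {sorted(dims)}")
    return [[float(c) for c in p] for p in points]


def dump_ljson_points(points: Sequence[Sequence[float]],
                      labels: Optional[List[Dict]] = None) -> str:
    """Serialise points into the same assumed LJSON v2-style layout."""
    data = {
        "version": 2,
        "labels": labels if labels is not None else [],
        "landmarks": {
            "points": [[float(c) for c in p] for p in points],
            "connectivity": [],
        },
    }
    return json.dumps(data)
```

The same loader covers both kinds of static annotation: 2D image-space points and 3D model-space points differ only in the length of each point.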

- The dataset is available for non-commercial research purposes only.

- All the training images of the dataset are obtained from the FDDB and AFLW databases, as well as from YouTube (under a Creative Commons license) (please cite the corresponding papers when you use them). We are not responsible for the content or the meaning of these images.

- You agree not to reproduce, duplicate, copy, sell, trade, resell or exploit for any commercial purposes, any portion of the annotations and any portion of derived data.

- You agree not to further copy, publish or distribute any portion of the annotations of the dataset, except that copies of the dataset may be made for internal use at a single site within the same organisation.

- We reserve the right to terminate your access to the dataset at any time.

Testing

Participants will have their algorithms tested on other facial in-the-wild videos, which will be provided on a predefined date (see below). This dataset aims to test the ability of current systems to fit unseen subjects, independently of variations in pose, expression, illumination, background, occlusion and image quality.

The test data are available here.

A winner of the competition will be announced. Participants do not need to submit executables to the organisers, only their results on the test images. Participants can take part in one or more of the aforementioned (near) frontal or profile challenges. The 3D Menpo Challenge organisers will not take part in the competition. The test set images are similar in nature to those of the 3D Menpo training set.

Performance Assessment

Fitting performance will be assessed on the same mark-up provided for training, using well-known error measures. In particular, we will compute (a) the typical Euclidean point-to-point error [1,2] (sample MATLAB code for calculating the error can be downloaded here) and (b) the error in model space (measured in millimetres). The error will be calculated over (a) all landmarks and (b) the facial feature landmarks (eyebrows, eyes, nose and mouth). A cumulative curve giving the percentage of test images for which the error is below a given value will be produced. These results will then be returned to the participants for inclusion in their papers.
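
The point-to-point error and the cumulative error curve described above can be sketched as follows. This is a hypothetical Python version, not the organisers' MATLAB script: the normalisation of the 2D error is left as a `normaliser` argument because it is not stated here, and for the model-space error (in millimetres) it would simply stay at 1. The facial-feature subset is passed as a list of indices; `FEATURE_IDX_68` is a placeholder that assumes the standard 68-point ordering (indices 17 to 67 cover the eyebrows, nose, eyes and mouth), not the official subset for the 84-point mark-up.

```python
import math
from typing import List, Optional, Sequence

# Placeholder subset: in the standard 68-point ordering, indices 17-67 are the
# eyebrows, nose, eyes and mouth. The official subset for the 84-point
# 3D Menpo mark-up is not given here; substitute it before use.
FEATURE_IDX_68 = list(range(17, 68))


def point_to_point_error(pred: Sequence[Sequence[float]],
                         gt: Sequence[Sequence[float]],
                         indices: Optional[Sequence[int]] = None,
                         normaliser: float = 1.0) -> float:
    """Mean Euclidean distance over the selected landmarks, divided by `normaliser`.

    Works for 2D image-space points and for 3D model-space points (with
    normaliser=1.0 the result is in model units, e.g. millimetres).
    """
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth have different lengths")
    idx = range(len(gt)) if indices is None else indices
    dists = [math.dist(pred[i], gt[i]) for i in idx]
    return sum(dists) / (len(dists) * normaliser)


def cumulative_error_curve(errors: Sequence[float],
                           thresholds: Sequence[float]) -> List[float]:
    """Percentage of test samples whose error is strictly below each threshold."""
    n = len(errors)
    return [100.0 * sum(1 for e in errors if e < t) / n for t in thresholds]
```

For example, computing one error per test frame with `point_to_point_error` and passing the list to `cumulative_error_curve` with a grid of thresholds yields the points of the cumulative curve; `math.dist` requires Python 3.8 or later.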

By submitting, authors acknowledge that the resulting curve may be used by the organisers in any related visualisations or results. Authors are prohibited from sharing their results with other competing teams.

The organisers cannot publish the participants' resulting .ljson files (a format similar to .pts) without their consent. Only one final submission per team will be accepted, to avoid overfitting to the test set. Participants who wish to test their algorithms should therefore use their own validation set rather than submitting multiple times. In each image a single face is annotated: the one closest to the centre of the image.
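
Since only the face closest to the image centre is annotated, a pipeline that detects several faces needs to pick that one before fitting. A minimal sketch, assuming detections are axis-aligned boxes `(x0, y0, x1, y1)` in pixels and that "closest" means the smallest Euclidean distance between the box centre and the image centre; both the box format and the criterion are assumptions, as the exact rule is not defined here.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1), hypothetical format


def select_central_face(boxes: Sequence[Box], width: float, height: float) -> int:
    """Index of the box whose centre is nearest to the image centre."""
    if not boxes:
        raise ValueError("no face detections given")
    cx, cy = width / 2.0, height / 2.0

    def squared_distance(box: Box) -> float:
        x0, y0, x1, y1 = box
        bx, by = (x0 + x1) / 2.0, (y0 + y1) / 2.0
        return (bx - cx) ** 2 + (by - cy) ** 2

    return min(range(len(boxes)), key=lambda i: squared_distance(boxes[i]))
```

Comparing squared distances avoids a square root without changing which box is nearest.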

3D Faces Tracking in-the-wild Challenge/Workshop

Our aim is to accept up to 10 papers to be orally presented at the workshop.

Submission Information:

Challenge participants should submit a paper to the 3D Menpo Challenge summarising the methodology and the performance achieved by their algorithm. Submissions should adhere to the main ICCV 2017 proceedings style. The workshop papers will be published in the ICCV 2017 proceedings. Please sign up in the submission system (which will be ready by the end of this week) to submit your paper.

Important Dates:

- 17 June: Announcement of the challenges (training data available from 20 June)

- 1 August: Release of the test videos

- 10 August: Deadline of returning results

- 11 August: Results returned to the authors

- 16 August: Deadline for paper submission

- 20 August: Decisions

- 25 August: Submission of the camera-ready papers

Contact:

Workshop Administrator: menpo.3d.challenge@gmail.com

References

[1] C. Sagonas, G. Tzimiropoulos, S. Zafeiriou & M. Pantic (2013, December). 300 faces in-the-wild challenge: The first facial landmark localization challenge. In Computer Vision Workshops (ICCVW), 2013 IEEE International Conference on (pp. 397-403).

[2] J. Shen, S. Zafeiriou, G.G. Chrysos, J. Kossaifi, G. Tzimiropoulos & M. Pantic (2015, December). The first facial landmark tracking in-the-wild challenge: Benchmark and results. In Computer Vision Workshop (ICCVW), 2015 IEEE International Conference on (pp. 1003-1011). IEEE.

[3] S. Zafeiriou, G. Trigeorgis, G. Chrysos, J. Deng and J. Shen. "The Menpo Facial Landmark Localisation Challenge: A step closer to the solution.", CVPRW, 2017.

[4] J. Booth, et al. "3D Face Morphable Models "In-the-Wild"", CVPR, 2017.

Sponsors:

The 3D Faces "in-the-wild" Tracking Challenge has been supported by EPSRC project FACER2VM and a Google Faculty Award.