Affect-sensitive HCI

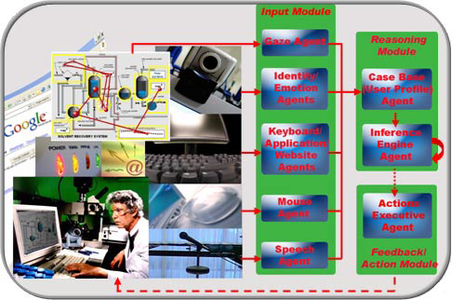

We developed Gaze-X, a system that supports affective multimodal human-computer interaction (AMM-HCI): the user’s actions and emotions are modeled and then used to adapt the interaction and support the user in his or her activity.

The system is based on sensing and interpreting the human part of the computer’s context, defined as W5+ (who, where, what, when, why, how). It integrates several natural human communicative modalities, including speech, eye gaze direction, face and facial expression, with standard HCI modalities such as keystrokes, mouse movements, and active software identification. These inputs feed processes that make decisions and adapt the HCI to support the user’s activity according to his or her preferences. A usability study conducted in an office scenario with a number of users indicates that Gaze-X is perceived as effective, easy to use, useful, and affectively qualitative.
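To make the W5+ idea concrete, the following is a minimal sketch of how a context snapshot and a preference-driven adaptation rule might be represented. It is not the Gaze-X implementation: the class `W5Context`, the function `choose_adaptation`, the mapping of modalities to fields, the `allow_help` preference key, and the `"frustrated"` label are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class W5Context:
    """One snapshot of the user's context along the W5+ dimensions.

    The field-to-modality mapping in the comments is an assumption.
    """
    who: str            # user identity (e.g. from face recognition)
    where: str          # focus of attention (e.g. from eye gaze direction)
    what: str           # current action (e.g. from keystrokes or mouse)
    when: float         # timestamp in seconds
    why: Optional[str]  # inferred goal; None when unknown
    how: str            # affective state label (e.g. from facial expression)


def choose_adaptation(ctx: W5Context, preferences: dict) -> str:
    """Toy decision rule: offer help only if the user appears frustrated
    and that user's preferences allow help to be offered."""
    allowed = preferences.get(ctx.who, {}).get("allow_help", False)
    if ctx.how == "frustrated" and allowed:
        return f"offer_help:{ctx.what}"
    return "no_change"


# Hypothetical usage with made-up values.
ctx = W5Context("alice", "editor_window", "typing", 12.0, None, "frustrated")
print(choose_adaptation(ctx, {"alice": {"allow_help": True}}))
```

Keeping the sensed context in a single record separates the sensing and interpretation stage from the decision stage, so the adaptation rule can be changed per user without touching the modality-specific code.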

Involved group members

Maja Pantic, Jie Shen, Joan Alabort-i-Medina, Konstantinos Bousmalis, Dimitrios Kollias, Ognjen Rudovic, Yue Zhang, Chunliang Hao, Yiming Lin

Related Publications

HCI^2 Workbench: A Development Tool for Multimodal Human-Computer Interaction Systems

J. Shen, W. Shi, M. Pantic. Proceedings of IEEE International Conference on Automatic Face and Gesture Recognition (FG'11), ECAG'11 - 2nd FG Workshop on Facial and Bodily Expressions for Control and Adaptation of Games. Santa Barbara, CA, USA, pp. 766 - 773, March 2011.

A Software Framework for Multimodal Human-Computer Interaction Systems

J. Shen, M. Pantic. Proceedings of IEEE Int'l Conf. Systems, Man, and Cybernetics (SMC'09). San Antonio, USA, pp. 2038 - 2045, October 2009.

Human-Centred Intelligent Human-Computer Interaction (HCI2): How far are we from attaining it?

M. Pantic, A. Nijholt, A. Pentland, T. Huang. Int'l Journal of Autonomous and Adaptive Communications Systems. 1(2): pp. 168 - 187, 2008.

Emotionally aware automated portrait painting

S. Colton, M. F. Valstar, M. Pantic. Proceedings of ACM Int'l Conf. Digital Interactive Media in Entertainment and Arts (DIMEA'08). Athens, Greece, pp. 304 - 311, September 2008.

Do people emote while engaged in HCI office scenarios?

M. Santos, M. Pantic. Proceedings of Int'l Conf. Interfaces and Human Computer Interaction (IHCI'08). Amsterdam, Netherlands, pp. 101 - 108, July 2008.

Gaze-X: Adaptive, Affective, Multimodal Interface for Single-User Office Scenarios

L. Maat, M. Pantic. Editors: T. Huang, A. Nijholt, M. Pantic, A. Pentland. Lecture Notes in Artificial Intelligence, Special Volume on Artificial Intelligence for Human Computing. vol. 4451, pp. 251 - 271, 2007.

Gaze-X: Adaptive affective multimodal interface for single-user office scenarios

L. Maat, M. Pantic. Proceedings of ACM Int'l Conf. Multimodal Interfaces (ICMI'06). Banff, Canada, pp. 171 - 178, November 2006.

Face for Ambient Interface

M. Pantic. Key Note Paper, Lecture Notes in Artificial Intelligence, Special Volume on Ambient Intelligence in Everyday Life. vol. 3864, pp. 35 - 66, 2006.

Towards an Affect-sensitive Multimodal Human-Computer Interaction

M. Pantic, L. Rothkrantz. Invited Paper, Proceedings of the IEEE, Special Issue on Multimodal Human-Computer Interaction (HCI). 91(9): pp. 1370 - 1390, 2003.

Affect-sensitive Multi-modal Monitoring in Ubiquitous Computing: Advances and Challenges

M. Pantic, L. Rothkrantz. Proceedings of AAAI / IEEE Int'l Conf. Enterprise Information Systems (ICEIS'01). Setubal, Portugal, pp. 466 - 474, July 2001.
