Audiovisual human behaviour data capture

Applications such as surveillance and human behaviour analysis require high-bandwidth recording from multiple cameras as well as from other sensors. At the same time, sensor fusion demands more accurate synchronisation between those sensors. Building such a system from commercial off-the-shelf components can compromise quality and accuracy, because it is difficult to handle the combined data rate of multiple sensors, the offset and rate discrepancies between independent hardware clocks, the absence of trigger inputs or outputs on the hardware, and the differing methods used to timestamp the recorded data.

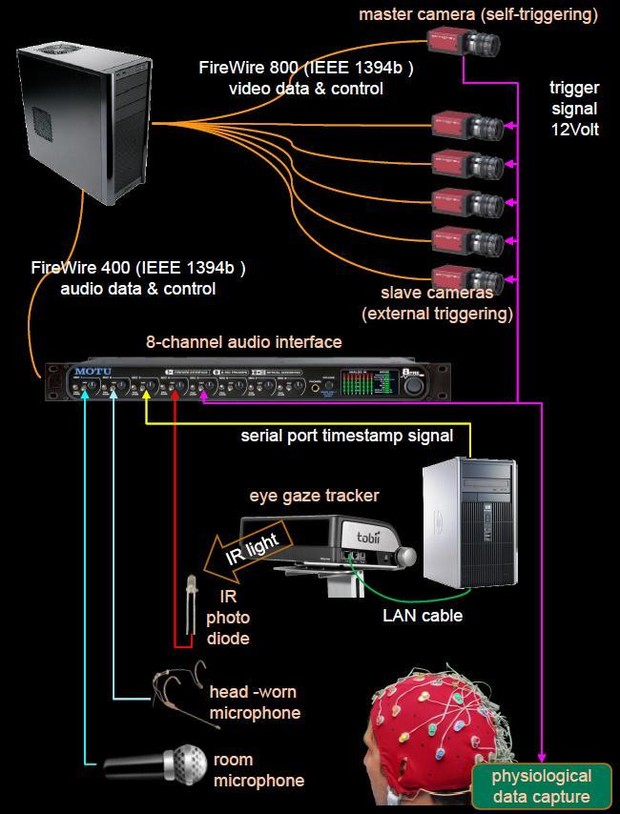

To achieve accurate synchronisation, we centralise the synchronisation task by recording all trigger and timestamp signals with a multi-channel audio interface. The cameras capture video frames under control of an external trigger signal, which is recorded directly alongside the audio signals from the microphones. For sensors that lack an external trigger input, such as a stand-alone eye gaze tracker, the computer that captures the sensor data periodically generates timestamp signals from its serial port output. These signals can also serve as a common time base for synchronising multiple asynchronous audio interfaces. In addition, an infrared-sensitive photodiode measures the light flashes that the gaze tracker emits when capturing frames; these flashes allow the eye gaze data to be synchronised to within 20 microseconds.
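One way to use periodic timestamp signals like these is to fit a linear mapping from the clock of the sensor PC to the sample clock of the audio interface; a first-order fit absorbs both the offset and the rate discrepancy between the two clocks. The sketch below illustrates that idea and is not necessarily the exact procedure used in our setup. It assumes that the PC logs the send time of every serial-port pulse and that the onset of each pulse has already been located in the recorded audio channel. The function names `fit_clock_mapping` and `pc_to_audio_time`, and the numbers in the example, are hypothetical.

```python
import numpy as np

def fit_clock_mapping(pc_times, audio_times):
    """Least-squares fit of audio_time = rate * pc_time + offset.

    pc_times:    send times of the timestamp pulses on the sensor PC's clock (s)
    audio_times: onset times of the same pulses in the audio recording (s)
    (Hypothetical helper for illustration.)
    """
    pc = np.asarray(pc_times, dtype=float)
    au = np.asarray(audio_times, dtype=float)
    # Degree-1 polynomial fit; np.polyfit returns the highest power first.
    rate, offset = np.polyfit(pc, au, 1)
    return rate, offset

def pc_to_audio_time(t_pc, rate, offset):
    """Map a sensor-PC timestamp (e.g. of a gaze sample) onto the audio time base."""
    return rate * np.asarray(t_pc, dtype=float) + offset

if __name__ == "__main__":
    # Hypothetical example: one pulse per second for 10 minutes, an audio clock
    # running 50 ppm fast relative to the PC clock, and a 2.5 s offset.
    pc = np.arange(0.0, 600.0, 1.0)
    true_rate, true_offset = 1.0 + 50e-6, 2.5
    rng = np.random.default_rng(0)
    # Add about 10 microseconds of timing jitter to the detected onsets.
    audio = true_rate * pc + true_offset + rng.normal(0.0, 10e-6, pc.size)
    rate, offset = fit_clock_mapping(pc, audio)
    print(f"rate = {rate:.8f}, offset = {offset:.6f} s")
    # Ignoring the rate term, a 50 ppm discrepancy accumulates to 30 ms over 600 s.
    print(f"drift over 600 s without rate correction: {(rate - 1.0) * 600 * 1e3:.1f} ms")
```

The same fit can relate two asynchronous audio interfaces that both record the same timestamp signal: use the pulse onsets found in one interface as `pc_times` and those found in the other as `audio_times`.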

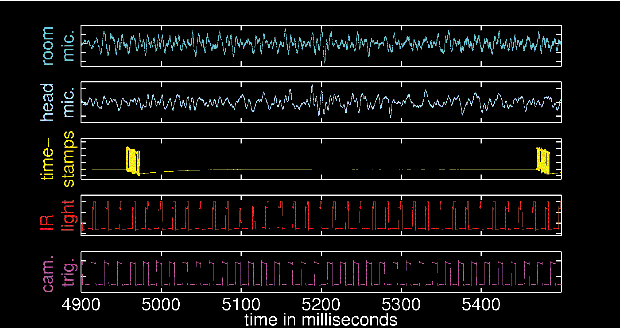

An example of a multi-channel recording with the audio interface is shown below:

Because all audio input channels are sampled simultaneously, the events in all channels can be accurately related to each other in time.
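Because every channel shares the same sample clock, the sample position of an event converts to a time that is directly comparable across channels. A minimal sketch, assuming the trigger and microphone signals are available as NumPy arrays and that a fixed threshold separates a pulse from the baseline: rising edges are found by threshold crossing and linearly interpolated between the two straddling samples, which gives a resolution finer than the sample period (about 10.4 microseconds at 96 kHz). The function names `rising_edge_times` and `frame_index_at` and the synthetic signals are hypothetical.

```python
import numpy as np

def rising_edge_times(signal, fs, threshold):
    """Times (s) at which `signal` crosses `threshold` upwards.

    The crossing is linearly interpolated between the last sample below the
    threshold and the first sample at or above it (sub-sample resolution).
    """
    x = np.asarray(signal, dtype=float)
    # Index i of every pair (x[i], x[i+1]) that straddles the threshold upwards.
    idx = np.nonzero((x[:-1] < threshold) & (x[1:] >= threshold))[0]
    # Fraction of a sample period from x[i] to the interpolated crossing point.
    frac = (threshold - x[idx]) / (x[idx + 1] - x[idx])
    return (idx + frac) / fs

def frame_index_at(event_time, frame_times):
    """Index of the last camera trigger at or before `event_time` (-1 if none)."""
    return int(np.searchsorted(frame_times, event_time, side="right")) - 1

if __name__ == "__main__":
    fs = 96_000                      # audio sample rate (Hz)
    n = fs // 10                     # 0.1 s of synthetic data
    # Hypothetical trigger channel: a 1 ms pulse every 1600 samples (60 Hz, for round numbers).
    trigger = np.zeros(n)
    for start in range(100, n, 1600):
        trigger[start:start + 96] = 1.0
    # Hypothetical microphone channel containing a single click.
    mic = np.zeros(n)
    mic[5000:5010] = 1.0
    frame_times = rising_edge_times(trigger, fs, 0.5)
    click = rising_edge_times(mic, fs, 0.5)[0]
    print(len(frame_times), frame_index_at(click, frame_times))  # prints: 6 3
```

On real recordings, noise or ringing near the threshold can produce spurious crossings, so some hysteresis or a minimum interval between pulses may be needed; that is omitted here for brevity.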

Furthermore, we have built capture PCs from low-cost consumer components that can capture 8-bit video data with a spatial resolution of 1024×1024 pixels and a temporal resolution of 59.1 Hz from at least 14 cameras, together with 8 channels of 24-bit audio at 96 kHz.
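The combined data rate of this configuration follows directly from the figures above. The calculation below assumes uncompressed data with 1 byte per 8-bit pixel and 3 bytes per 24-bit audio sample, and it ignores network, driver and file-format overhead, so the actual requirement will be at least this high. The function names are hypothetical.

```python
def video_rate_bytes_per_s(n_cameras, width, height, bytes_per_pixel, fps):
    """Uncompressed video data rate in bytes per second."""
    return n_cameras * width * height * bytes_per_pixel * fps

def audio_rate_bytes_per_s(n_channels, bytes_per_sample, sample_rate):
    """Uncompressed PCM audio data rate in bytes per second."""
    return n_channels * bytes_per_sample * sample_rate

video = video_rate_bytes_per_s(14, 1024, 1024, 1, 59.1)  # 8-bit = 1 byte per pixel
audio = audio_rate_bytes_per_s(8, 3, 96_000)             # 24-bit = 3 bytes per sample
print(f"video: {video / 1e6:.1f} MB/s")                  # prints: video: 867.6 MB/s
print(f"audio: {audio / 1e6:.2f} MB/s")                  # prints: audio: 2.30 MB/s
print(f"total: {(video + audio) / 1e6:.1f} MB/s")        # prints: total: 869.9 MB/s
```

The video streams dominate: the audio adds only about 2.3 MB/s to roughly 868 MB/s of video.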

We currently have a multi-sensor capture setup for monitoring a person interacting with a computer, as shown at the top of this page. Using the same synchronisation method, we can also record physiological data such as heart rate and EEG, accurately synchronised with audio, video and eye gaze direction.

Involved group members

Maja Pantic, Irene Kotsia, Konstantinos Bousmalis, Aaron C. Elkins, Sebastian Kaltwang, Dimitrios Kollias, Jeroen Lichtenauer, Yiming Lin

Related Publications

- Cost-effective solution to synchronized audio-visual capture using multiple sensors

  J. Lichtenauer, M. F. Valstar, J. Shen, M. Pantic. Proceedings of IEEE Int'l Conf. Advanced Video and Signal Based Surveillance (AVSS'09). Genoa, Italy, pp. 324-329, September 2009.

BibTeX reference

@inproceedings{Lichtenauer2009cstsa,

  author = {J. Lichtenauer and M. F. Valstar and J. Shen and M. Pantic},
  pages = {324--329},
  address = {Genoa, Italy},
  booktitle = {Proceedings of IEEE Int'l Conf. Advanced Video and Signal Based Surveillance (AVSS'09)},
  month = {September},
  title = {Cost-effective solution to synchronized audio-visual capture using multiple sensors},
  year = {2009},

}

Endnote reference

%0 Conference Proceedings

%T Cost-effective solution to synchronized audio-visual capture using multiple sensors
%A Lichtenauer, J.
%A Valstar, M. F.
%A Shen, J.
%A Pantic, M.
%B Proceedings of IEEE Int'l Conf. Advanced Video and Signal Based Surveillance (AVSS'09)
%D 2009
%8 September
%C Genoa, Italy
%F Lichtenauer2009cstsa
%P 324-329