Spatiotemporal Salient Point Detection

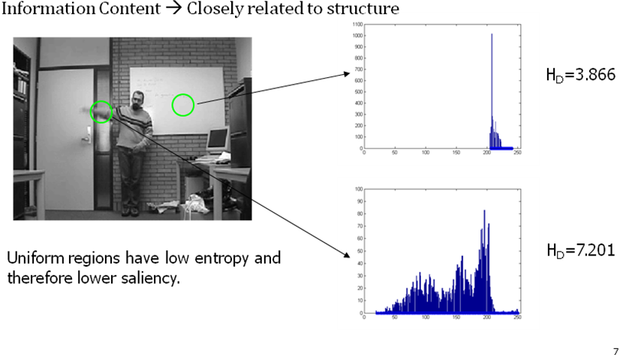

Spatiotemporal interest points have been used successfully to represent human activities in image sequences. In this part of our research, we propose the use of spatiotemporal salient points, obtained by extending the information-theoretic salient-feature detector of Kadir and Brady in the temporal direction. Our goal is to obtain a sparse representation of a human action as a set of spatiotemporal points that correspond to peaks in activity variation, such as the edges of a moving object.
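
The entropy-based saliency measure behind this detector can be illustrated with a minimal Python/NumPy sketch. It assumes a grey-level video stored as an array indexed (t, y, x) with intensities in [0, 1], a cubic spatiotemporal neighbourhood whose spatial and temporal half-width both equal a single scale `s`, and a fixed 16-bin intensity histogram. `local_entropy` and `salient_scales` are hypothetical helper names, not the published implementation. Kadir and Brady's detector additionally weights the entropy by how much the local histogram changes across scales; that term is omitted here, so the sketch only shows the entropy-versus-scale profile at one location and the selection of scales where it peaks.

```python
import numpy as np


def local_entropy(video, t, y, x, s, bins=16):
    """Shannon entropy (bits) of the intensity histogram in a cube of
    half-width s centred at (t, y, x), clipped at the video borders.

    Assumption: video is indexed (t, y, x) with intensities in [0, 1].
    """
    cube = video[max(t - s, 0):t + s + 1,
                 max(y - s, 0):y + s + 1,
                 max(x - s, 0):x + s + 1]
    hist, _ = np.histogram(cube, bins=bins, range=(0.0, 1.0))
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute nothing (0 * log 0 = 0)
    return float(-(p * np.log2(p)).sum())


def salient_scales(video, t, y, x, scales, bins=16):
    """Return (scale, entropy) pairs where the entropy has a local maximum over scale.

    Simplification: Kadir and Brady's inter-scale weighting term is omitted.
    """
    h = [local_entropy(video, t, y, x, s, bins) for s in scales]
    return [(scales[i], h[i])
            for i in range(1, len(scales) - 1)
            if h[i] > h[i - 1] and h[i] >= h[i + 1]]


# Example: a small bright block in an otherwise dark volume.
video = np.zeros((15, 15, 15))
video[6:9, 6:9, 6:9] = 1.0
print(salient_scales(video, 7, 7, 7, [0, 1, 2, 3, 4]))  # the entropy profile peaks at s = 2
```

In a full detector the peak search would be repeated at every spatiotemporal location and the strongest responses kept as salient points; how many points to keep, and whether spatial and temporal scales are searched independently, are choices this sketch leaves open.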

The scales at which the entropy attains local maxima are detected automatically. Each image sequence is then represented as a set of spatiotemporal salient points, and we use the chamfer distance as the distance measure between two such representations. To cope with different speeds in the execution of the actions and to achieve invariance to the subjects' scale, we propose a linear space–time-warping technique that linearly warps any two examples by minimizing their chamfer distance. A simple k-nearest-neighbor (kNN) classifier and a classifier based on relevance vector machines (RVMs) are used to test the efficiency of the representation. We evaluate the proposed method on real image sequences, using aerobic exercises as our test domain.
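
The matching and classification steps can be sketched in the same way. This Python/NumPy sketch assumes that each sequence has already been reduced to an array of salient points with columns (x, y, t), uses the symmetric average form of the chamfer distance, removes translation by centring both point sets on their centroids, and approximates the linear space–time warping by an exhaustive search over a small, hypothetical grid of spatial and temporal scale factors. The function names and grid values are illustrative only; the published method may parametrise and optimise the warp differently.

```python
from collections import Counter
from itertools import product

import numpy as np


def chamfer(a, b):
    """Symmetric average chamfer distance between point sets a (n, 3) and b (m, 3).

    Each row is one salient point as (x, y, t).
    """
    # Pairwise Euclidean distances, shape (n, m).
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # Mean nearest-neighbour distance in each direction, then averaged.
    return 0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean())


def warped_chamfer(a, b, space_scales=(0.8, 0.9, 1.0, 1.1, 1.25),
                   time_scales=(0.5, 0.75, 1.0, 1.5, 2.0)):
    """Smallest chamfer distance over a grid of linear space-time warps of a.

    Both sets are centred on their centroids (assumed translation
    normalisation); a is then scaled by s in x and y and by r in t.
    Returns (distance, s, r).
    """
    a0 = a - a.mean(axis=0)
    b0 = b - b.mean(axis=0)
    best = (np.inf, 1.0, 1.0)
    for s, r in product(space_scales, time_scales):
        d = chamfer(a0 * np.array([s, s, r]), b0)
        if d < best[0]:
            best = (d, s, r)
    return best


def knn_classify(query, examples, labels, k=1):
    """Majority label among the k examples with the smallest warped chamfer distance."""
    dists = [warped_chamfer(query, ex)[0] for ex in examples]
    nearest = np.argsort(dists)[:k]
    return Counter(labels[i] for i in nearest).most_common(1)[0][0]
```

The grid search costs one chamfer evaluation per (spatial, temporal) scale pair, so a finer grid recovers the warp more accurately at the price of run time; with `k=1` the classifier reduces to plain nearest-neighbour matching.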


Related Publications


Spatiotemporal salient points for visual recognition of human actions

A. Oikonomopoulos, I. Patras, M. Pantic. IEEE Transactions on Systems, Man and Cybernetics - Part B. 36(3): pp. 710 - 719, 2006.

BibTeX reference:

@article{Oikonomopoulos2006harws,

author = {A. Oikonomopoulos and I. Patras and M. Pantic},

pages = {710--719},

journal = {IEEE Transactions on Systems, Man and Cybernetics - Part B},

number = {3},

title = {Spatiotemporal salient points for visual recognition of human actions},

volume = {36},

year = {2006},

}

EndNote reference:

%0 Journal Article

%T Spatiotemporal salient points for visual recognition of human actions

%A Oikonomopoulos, A.

%A Patras, I.

%A Pantic, M.

%J IEEE Transactions on Systems, Man and Cybernetics - Part B

%D 2006

%V 36

%N 3

%F Oikonomopoulos2006harws

%P 710-719

Kernel-based recognition of human actions using spatiotemporal salient points

A. Oikonomopoulos, I. Patras, M. Pantic. Proceedings of IEEE Int'l Conf. Computer Vision and Pattern Recognition (CVPR'06). New York, USA, 3: pp. 151, June 2006.

BibTeX reference:

@inproceedings{Oikonomopoulos2006kroha,

author = {A. Oikonomopoulos and I. Patras and M. Pantic},

pages = {151},

address = {New York, USA},

booktitle = {Proceedings of IEEE Int'l Conf. Computer Vision and Pattern Recognition (CVPR'06)},

month = {June},

title = {Kernel-based recognition of human actions using spatiotemporal salient points},

volume = {3},

year = {2006},

}

EndNote reference:

%0 Conference Proceedings

%T Kernel-based recognition of human actions using spatiotemporal salient points

%A Oikonomopoulos, A.

%A Patras, I.

%A Pantic, M.

%B Proceedings of IEEE Int'l Conf. Computer Vision and Pattern Recognition (CVPR'06)

%D 2006

%8 June

%V 3

%C New York, USA

%F Oikonomopoulos2006kroha

%P 151

Spatiotemporal saliency for human action recognition

A. Oikonomopoulos, I. Patras, M. Pantic. Proceedings of IEEE Int'l Conf. Multimedia and Expo (ICME'05). Amsterdam, The Netherlands, pp. 430 - 433, July 2005.

BibTeX reference:

@inproceedings{Oikonomopoulos2005ssfha,

author = {A. Oikonomopoulos and I. Patras and M. Pantic},

pages = {430--433},

address = {Amsterdam, The Netherlands},

booktitle = {Proceedings of IEEE Int'l Conf. Multimedia and Expo (ICME'05)},

month = {July},

title = {Spatiotemporal saliency for human action recognition},

year = {2005},

}

EndNote reference:

%0 Conference Proceedings

%T Spatiotemporal saliency for human action recognition

%A Oikonomopoulos, A.

%A Patras, I.

%A Pantic, M.

%B Proceedings of IEEE Int'l Conf. Multimedia and Expo (ICME'05)

%D 2005

%8 July

%C Amsterdam, The Netherlands

%F Oikonomopoulos2005ssfha

%P 430-433