MAHNOB-Implicit-Tagging database

Characterising multimedia content with relevant, reliable and discriminating tags is vital for multimedia information retrieval. With the rapid expansion of digital multimedia content, alternatives to the existing explicit tagging are needed to enrich the pool of tagged content. Currently, social media websites encourage users to tag their content. However, users’ intent when tagging multimedia content does not always match information retrieval goals. A large portion of user-defined tags are either motivated by increasing the popularity and reputation of a user in an online community or based on individual and egoistic judgments [1]. Moreover, users do not evaluate media content on the same criteria. Some might tag multimedia content with words that express their emotion, while others might use tags to describe the content. For example, a picture can receive different tags based on the objects in the image, the camera with which the picture was taken, or the emotion a user felt when looking at the picture.

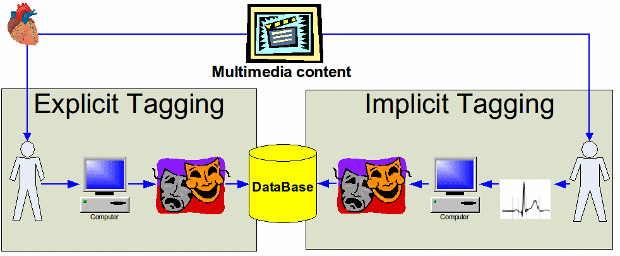

The principle of implicit tagging is to replace the user input by automatically finding descriptive tags for multimedia content, derived from an observer’s natural response, for instance the emotions someone shows. The difference from explicit tagging is illustrated in the diagram above.

In order to facilitate research on this new area of multimedia tagging, we have recorded a database of user responses to multimedia content.

Thirty participants were shown fragments of movies and pictures while being monitored with 6 video cameras, a head-worn microphone, an eye gaze tracker, and physiological sensors measuring ECG, EEG (32 channels), respiration amplitude, and skin temperature.

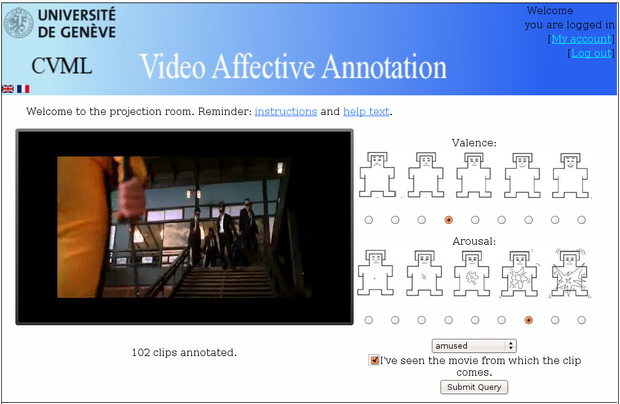

Each experiment consisted of two parts. In the first part, fragments of movies were shown, and a participant was asked to annotate their own emotive state after each fragment on a scale of valence and arousal, as shown below:

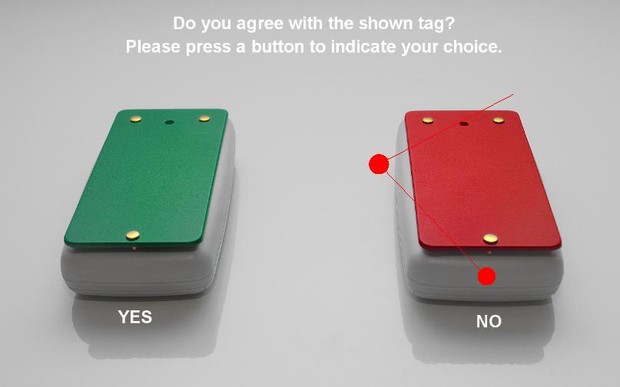

In the second part of the experiment, images or video fragments were shown together with a tag at the bottom of the screen. In some cases, the tag correctly described something about the situation. In other cases, the tag did not actually apply to the media item. Below are two examples, overlaid with the eye tracking measurements as red dots and lines for gaze locations and shifts, respectively.

After each item, a participant was asked to press a green button if they agreed with the tag being applicable to the media item, or press a red button if not.
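As a minimal sketch of how the responses from this second part could be tabulated, the Python snippet below pairs each button press with whether the displayed tag actually applied to the item. The record layout, the field names and the sample trials are hypothetical illustrations and do not reflect the file format of the database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagTrial:
    """One tagging trial (hypothetical layout, not the database's format)."""
    item_id: str
    tag_is_correct: bool  # True if the displayed tag really applied to the item
    pressed_green: bool   # True for the green (agree) button, False for red


def agreement_rate(trials):
    """Fraction of trials where the button press matched the tag's correctness."""
    if not trials:
        raise ValueError("no trials given")
    matches = sum(t.pressed_green == t.tag_is_correct for t in trials)
    return matches / len(trials)


# Made-up trials for illustration only.
trials = [
    TagTrial("img_01", tag_is_correct=True, pressed_green=True),
    TagTrial("img_02", tag_is_correct=False, pressed_green=False),
    TagTrial("img_03", tag_is_correct=False, pressed_green=True),
    TagTrial("vid_01", tag_is_correct=True, pressed_green=True),
]
rate = agreement_rate(trials)
```

Keeping the tag's correctness and the participant's response as separate fields makes it possible to study the cases where the two disagree.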

During the whole experiment, audio, video, gaze data and physiological data were recorded simultaneously with accurate synchronisation between sensors, as described in [1]. The database is freely available to the research community from http://mahnob-db.eu.
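Synchronised recordings allow samples from different sensors to be related on a common timeline. The sketch below shows one simple way to do this, assuming every stream carries sorted timestamps on a shared clock: for each video frame it looks up the nearest sample of a second stream. The function names, the millisecond timestamps and the sampling rates are assumptions made for illustration. This is not the synchronisation method of the database, which is described in the publications below.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the sample in sorted `timestamps` closest to time `t`."""
    if not timestamps:
        raise ValueError("empty stream")
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose the closer of the two neighbours; ties go to the earlier sample.
    before, after = timestamps[i - 1], timestamps[i]
    return i - 1 if t - before <= after - t else i


def align(frame_times, signal_times):
    """For each frame time, the index of the nearest signal sample."""
    return [nearest_index(signal_times, t) for t in frame_times]


# Hypothetical streams, timestamps in integer milliseconds on a shared clock:
# video frames every 100 ms, a physiological signal sampled every 25 ms.
frame_times = list(range(0, 500, 100))
signal_times = list(range(0, 500, 25))
mapping = align(frame_times, signal_times)
```

Integer timestamps are used here to avoid floating-point comparison issues. A nearest-sample lookup is only meaningful when the streams already share a clock, as this sketch assumes.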

Related Publications

-

A multimodal database for affect recognition and implicit tagging

M. Soleymani, J. Lichtenauer, T. Pun, M. Pantic. IEEE Transactions on Affective Computing, vol. 3, issue 1, pp. 42 - 55, April 2012.

-

Cost-effective solution to synchronized audio-visual capture using multiple sensors

J. Lichtenauer, M. F. Valstar, J. Shen, M. Pantic. Proceedings of IEEE Int'l Conf. Advanced Video and Signal Based Surveillance (AVSS'09). Genoa, Italy, pp. 324 - 329, September 2009.
