Automatic Facial Expression Analysis

Facial expressions play a significant role in our social and emotional lives. They are visually observable, interactive signals that reveal our current focus of attention, regulate conversation through gaze and nods, clarify what has been said through lip movements, and display our affective states and intentions. Automating the analysis of facial expressions would be highly beneficial for fields as diverse as security, behavioural science, medicine, communication, and education. In security contexts, facial expressions play a crucial role in establishing or detracting from credibility. In medicine, facial expressions are a direct means of identifying when specific mental processes (e.g., pain, depression) are occurring. In education, pupils’ facial expressions inform the teacher of the need to adjust the instructional message. For natural interfaces between humans and machines (PCs, robots, and other devices), facial expressions provide a way to communicate basic information about needs and demands to the machine.

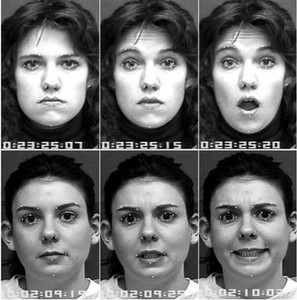

In contrast to other existing approaches to automated facial expression analysis, which deal only with prototypic facial expressions of emotions, our automated facial expression analyzer handles a large range of human facial behaviour by recognizing the facial muscle actions (Action Units, AUs) that produce expressions such as a smile, a frown, or a wink. We have developed various methods that can recognize a large number of AUs in either frontal or profile face image sequences.

The current focus of our research in the field is the automatic distinction between spontaneous and deliberate facial behaviour (including deception recognition), facial-dynamics-based person identification, personality trait recognition, and context-sensitive interpretation of facial behaviour.

Individual Final Projects in this area of research consider the following topics:

Registration

To handle possible head rotations and variations in scale of the observed face, we should register each frame of the input image sequence with the first frame. There are different ways to do so, but none of the available methods performs well in the presence of large head and body motions.
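One common way to register a frame with the first frame is to estimate a similarity transform (rotation, uniform scale, translation) from a few points tracked in both frames. The following is a minimal sketch of that idea, not the method used in our system: the reference points, the function names, and the simulated head motion are all assumptions made for illustration. It also assumes in-plane motion only, which is exactly the assumption that breaks down for large head and body motions.

```python
import cmath
import math


def estimate_similarity(src, dst):
    """Least-squares similarity transform mapping src points onto dst points.

    Points are treated as complex numbers, so the transform is z -> a*z + b,
    where abs(a) is the scale and the phase of a is the rotation angle.
    """
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    n = len(p)
    p_mean = sum(p) / n
    q_mean = sum(q) / n
    num = sum((pi - p_mean).conjugate() * (qi - q_mean) for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    a = num / den
    b = q_mean - a * p_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply z -> a*z + b to a list of (x, y) points."""
    out = []
    for x, y in points:
        z = a * complex(x, y) + b
        out.append((z.real, z.imag))
    return out


# Hypothetical stable points in the first frame (e.g. inner eye corners, nose tip).
reference = [(30.0, 40.0), (70.0, 40.0), (50.0, 70.0)]

# Simulated later frame: head rotated by 10 degrees, 1.2x closer, and shifted.
true_a = 1.2 * cmath.exp(1j * math.radians(10))
true_b = complex(5.0, -3.0)
current = apply_similarity(true_a, true_b, reference)

# Estimate the transform back to the first frame and register the points.
a, b = estimate_similarity(current, reference)
registered = apply_similarity(a, b, current)
```

In practice the same transform would be applied to the whole image, and the points used for estimation should be ones that facial expressions barely move.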

Geometric Facial Feature Detection

As facial expressions (i.e., contractions of facial muscles) induce movements of the facial skin and changes in the position and appearance of facial components (e.g., the mouth corners are pulled up in a smile), a fundamental step in facial expression analysis is to extract geometric features (facial points, shapes of facial components). We have developed an automated Facial Characteristic Point Detector, which is a texture-based method (it models the local texture around a given facial point). This detector performs well only on images of expressionless faces, so it needs to be enhanced to handle images of expressive faces as well. Another important research effort in this area is the development of a texture- and shape-based method (one that treats all facial points together as a shape, learns that shape from a set of labeled faces, and then searches for the proper shape for any unknown face).
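To make the notion of a geometric feature concrete, the sketch below turns a handful of detected facial points into two scale-normalized measurements. It is an illustration only: the point names, the choice of features, and the coordinates are hypothetical and are not the output of the Facial Characteristic Point Detector described above. Image coordinates are assumed to have y increasing downwards.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def geometric_features(points):
    """Compute simple geometric features from named facial points.

    Distances are divided by the distance between the inner eye corners,
    so the features do not change when the face appears larger or smaller.
    """
    iod = distance(points["left_eye_inner"], points["right_eye_inner"])
    mouth_width = distance(points["mouth_left"], points["mouth_right"]) / iod
    centre_y = (points["upper_lip"][1] + points["lower_lip"][1]) / 2.0
    corners_y = (points["mouth_left"][1] + points["mouth_right"][1]) / 2.0
    # Positive when the mouth corners sit above the mouth centre (y grows downwards).
    corner_raise = (centre_y - corners_y) / iod
    return {"mouth_width": mouth_width, "corner_raise": corner_raise}


# Made-up point coordinates for a neutral face and a smiling face.
neutral = {
    "left_eye_inner": (40.0, 40.0), "right_eye_inner": (60.0, 40.0),
    "mouth_left": (35.0, 80.0), "mouth_right": (65.0, 80.0),
    "upper_lip": (50.0, 76.0), "lower_lip": (50.0, 84.0),
}
smiling = {
    "left_eye_inner": (40.0, 40.0), "right_eye_inner": (60.0, 40.0),
    "mouth_left": (30.0, 76.0), "mouth_right": (70.0, 76.0),
    "upper_lip": (50.0, 76.0), "lower_lip": (50.0, 86.0),
}

neutral_features = geometric_features(neutral)
smiling_features = geometric_features(smiling)
```

With these made-up coordinates, both the mouth width and the corner raise are larger for the smiling face, which is the kind of change a geometric-feature-based AU recognizer would rely on.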

Transient Facial Feature Detection

Facial expressions (i.e., contractions of facial muscles) induce movements of the facial skin and changes in transient facial features (e.g., crow's-feet wrinkles around the eyes deepen in a genuine smile). A basic step in facial expression analysis is to extract appearance features (the texture of the facial skin, such as wrinkles and furrows). Methods such as edge detectors, Gabor wavelets, and texture-based deformable 3D models may prove suitable for realizing Transient Facial Feature Detectors.
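As an illustration of why Gabor wavelets are a natural candidate, the sketch below builds a real-valued Gabor kernel in plain Python and applies it to a synthetic patch of horizontal furrows. The kernel size, wavelength, and patch are assumptions chosen for the example, and the synthetic patch stands in for real skin texture; this is not a complete wrinkle detector.

```python
import math


def gabor_kernel(half_size, wavelength, theta, sigma, gamma=1.0, psi=0.0):
    """Real part of a Gabor filter as a (2*half_size+1) square list of lists.

    theta is the direction (in radians) along which the sinusoid varies,
    so the filter responds to stripes running perpendicular to theta.
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    kernel = []
    for y in range(-half_size, half_size + 1):
        row = []
        for x in range(-half_size, half_size + 1):
            xr = x * cos_t + y * sin_t
            yr = -x * sin_t + y * cos_t
            envelope = math.exp(-(xr * xr + gamma * gamma * yr * yr) / (2.0 * sigma * sigma))
            row.append(envelope * math.cos(2.0 * math.pi * xr / wavelength + psi))
        kernel.append(row)
    return kernel


def filter_response(patch, kernel):
    """Response of the filter centred on a patch of the same size as the kernel."""
    return sum(p * k for p_row, k_row in zip(patch, kernel) for p, k in zip(p_row, k_row))


half_size, wavelength, sigma = 9, 6.0, 3.0

# Synthetic patch of horizontal furrows: intensity varies only with y.
patch = [
    [math.cos(2.0 * math.pi * y / wavelength) for _ in range(-half_size, half_size + 1)]
    for y in range(-half_size, half_size + 1)
]

# Sinusoid varying along y (across the furrows) versus along x (along the furrows).
response_across = filter_response(patch, gabor_kernel(half_size, wavelength, math.pi / 2, sigma))
response_along = filter_response(patch, gabor_kernel(half_size, wavelength, 0.0, sigma))
```

The filter whose sinusoid runs across the furrows responds far more strongly than the one aligned with them, so a bank of such filters at several orientations and wavelengths can indicate both the presence and the direction of wrinkles.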

Comparison of Geometric and Appearance Features for AU recognition

Most existing systems for AU recognition are either feature-based (i.e., they use geometric features such as facial points or shapes of facial components), appearance-based (i.e., they use the texture of the facial skin, including wrinkles and furrows), or hybrid (using both geometric and appearance features). It is not clear, however, which kind of features is better for the recognition of which AU. More specifically, it is not clear which facial features yield the best recognition results for a given AU, independently of the classification scheme used (such as Support Vector Machines, Decision Trees, or Neural Networks).
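One possible way to organize such a comparison is to evaluate every combination of feature set and classifier with the same cross-validation protocol, separately for each AU. The sketch below shows the shape of that experiment for a single AU using leave-one-out cross-validation. Everything in it is an assumption for illustration: the two simple classifiers stand in for the schemes named above, and the feature values are toy numbers invented so the script runs. The accuracies it produces say nothing about real AUs.

```python
def nearest_centroid(train_x, train_y, x):
    """Predict the label whose mean feature vector is closest to x."""
    sums, counts = {}, {}
    for feats, label in zip(train_x, train_y):
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, value in enumerate(feats):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    best_label, best_dist = None, float("inf")
    for label, acc in sums.items():
        dist = sum((value / counts[label] - xi) ** 2 for value, xi in zip(acc, x))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def one_nearest_neighbour(train_x, train_y, x):
    """Predict the label of the single closest training sample."""
    best_label, best_dist = None, float("inf")
    for feats, label in zip(train_x, train_y):
        dist = sum((fi - xi) ** 2 for fi, xi in zip(feats, x))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def leave_one_out_accuracy(xs, ys, classifier):
    """Fraction of samples classified correctly when each is held out in turn."""
    correct = 0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        if classifier(train_x, train_y, xs[i]) == ys[i]:
            correct += 1
    return correct / len(xs)


# Toy data for one AU: label 1 means the AU is active. Values are invented.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
feature_sets = {
    "geometric": [[0.0], [0.02], [-0.01], [0.01], [0.2], [0.25], [0.22], [0.3]],
    "appearance": [[1.0], [3.0], [2.0], [4.0], [2.5], [1.5], [3.5], [4.5]],
}
classifiers = {
    "nearest_centroid": nearest_centroid,
    "one_nearest_neighbour": one_nearest_neighbour,
}

# Accuracy for every (feature set, classifier) pair.
results = {
    (feature_name, classifier_name): leave_one_out_accuracy(samples, labels, classifier)
    for feature_name, samples in feature_sets.items()
    for classifier_name, classifier in classifiers.items()
}
```

A feature set could be considered better for an AU only if it wins consistently across all classifiers in such a table, which is what makes the conclusion independent of the classification scheme.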

Deception Detection

The focus of the research in this field is on spotting the differences between the facial behaviour shown when somebody is lying and that shown when the same person is telling the truth, and on using this knowledge to develop a deception detector. No research has been conducted in this field yet, and there are no readily available datasets that can be used for the study. The data must first be acquired and annotated (in terms of activated AUs).

Contact:

Dr. Maja Pantic

Computing Department

Imperial College London

e-mail: m.pantic@imperial.ac.uk

website: http://www.doc.ic.ac.uk/~maja/